How to Go on Vacation Without Going

Everyone loves to show off some photos of their latest trip or family party. So, here I’ll share some to start off this week’s column. Never mind if you would rather not see my family’s party or my vacation; these aren’t those anyway. I’ve been under the weather and keeping my distance from folks. Here’s another secret though: they aren’t anybody’s.

I decided to go down the rabbit hole of machine learning image generation (machine learning is what we often call “AI” and is sometimes shortened to “ML”) while I was feeling punk enough, and not in an ’80s way, that little meaningful was going to get done by me beyond the absolute necessities.

The idea of generating photorealistic (or not) scenes from a computer is hardly a new one. Growing up, there was a software store in town I loved to spend time in, often rooting around the bargain bin. One day my bargain bin search yielded a copy of VistaPro, which looked intriguing and, happily, was. An early 3D landscape generator, it could create near photo-like mountains, though it was far more hard-pressed if you pushed it to put trees on hills.

Still, at the time, I found it enchanting: you could enter in some parameters and out came an amazing image of a place that doesn’t exist. Amazing for the time, but no one would be fooled into thinking it was real.

We’ve come a long way since then.

Stepping away from generating the non-existent for the moment, most of us are growing accustomed to machine learning playing a role in a lot of things we do nowadays: take a picture with a modern cell phone, and it applies machine learning to figure out which of several different images (or even segments thereof) looks best and how to process it to create a really good photograph. The results are often better than my dedicated camera can produce through more traditional means. Of course, some variation thereof also powers “personal assistants” like Alexa and Siri, and the various “intelligent” suggestions we see on our phones and tablets, though oftentimes those feel more like proof of unintelligence.

A few years back, ThisPersonDoesNotExist.com came online, demonstrating a machine learning model for generating people’s faces. Refresh that page a few times (it becomes almost a compulsion) and every face it shows you is one generated by a computer: a person who appears real, behind whom I can imagine a whole life’s story, yet for whom there is no story because the face is a mere computer generation.

(And, because this is the Internet, then stop by ThisCatDoesNotExist.com, because of course, we should spend time generating fake cats. Memes of the world, take note.)

More recently, the whole process has gotten even more fascinating. It’s one thing that a computer or phone can sort out elements of images to create a single, better, composite image, or be trained to create new, lifelike simulations of one thing (such as a face) after being fed tons of examples of what goes into a face’s appearance.

Those are impressive and highly usable things, but what about creating “photos” or “paintings” out of essentially thin air — mere words, like I might give to an artist? DALL-E, perhaps most of all, took the world by storm last year with the idea that an AI generator could take a text “prompt” — what we might think of as a photo caption — and turn it into a “photo” or piece of “artwork.”

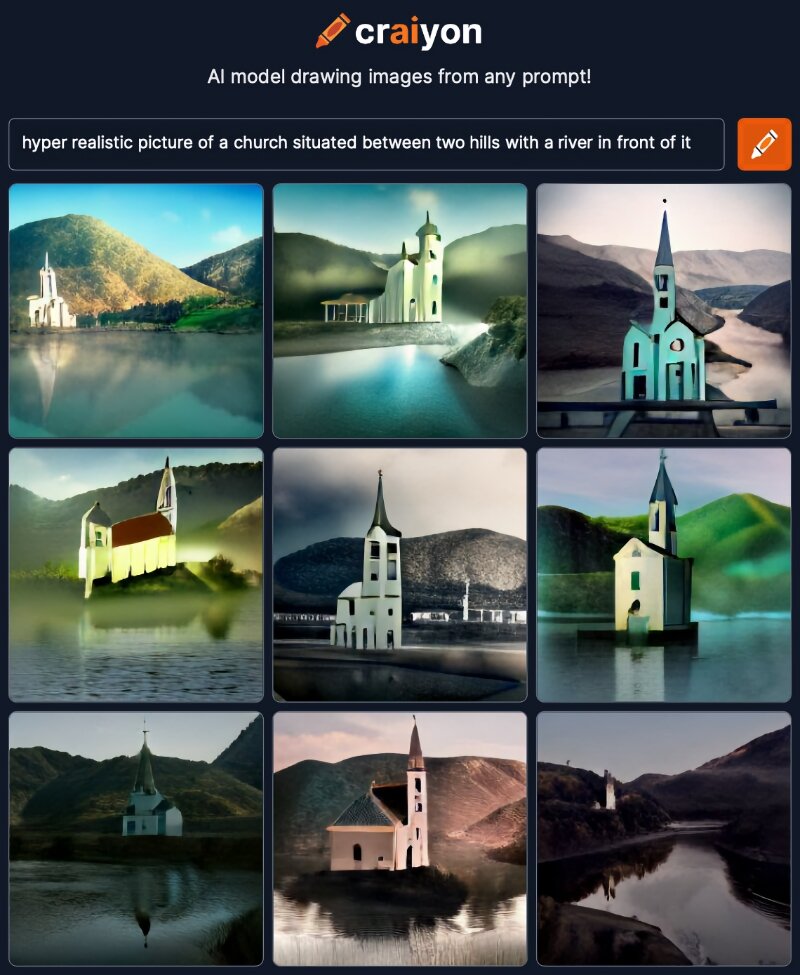

DALL-E only has limited availability, with a waitlist sitting between me and trying it at present, but for those of us tired of waiting, Craiyon appeared as a clone that took the Internet by its own storm. It’s fun and free (for non-commercial use) to play with, but certainly would fool absolutely no one into thinking its pictures, at least of people, are real.

We are still at the stage of trying to figure out who owns the rights to materials generated by AI. This will likely get messier before it is over, but for now: no matter how creative your prompts, the results are not yours to do with as you please — you can’t sell your “creations.”

These recent services are interesting, but none of them fulfills the promise of my long-ago copy of VistaPro: I still couldn’t put any of them on my computer and do just whatever I want with the results. Which is what led me to the promising, wild territory of Open Source machine learning.

Though it isn’t nearly as easy as simply dropping by a web site or downloading an app onto your phone, it shows just how powerful some of these tools are becoming and — intriguingly — how accessible they are to anyone with a moderately powerful computer and the willingness to roll up one’s sleeves and dig into that computer a bit.

My journey took me to the ML sensation of the moment, Stable Diffusion, which was released just a few weeks ago onto GitHub. (GitHub is the ubiquitous, Microsoft-owned site for sharing open code, a giant presence for those working on code, but not likely to be familiar otherwise.)

GitHub specializes in people experimenting with other people’s code in a collaborative fashion, and Stable Diffusion started a whole new string of so-called “forks” from people tweaking it for different uses. The one I settled on is legendary Perl contributor Lincoln Stein’s fork. Stein’s variant is notable for adding two different ways of interacting with the tool that are friendlier than entering commands into a terminal window (something non-techies gave up doing decades ago and some of those reading this probably have never done).

Thanks to the work of those who contributed to this fork, once Stable Diffusion is configured, running a simple command starts it up on one’s own computer and, beyond that initial command, everything else can be done as if it were a plain, old web site.

That’s once it is set up, though, so don’t get too excited just yet.

While these tools are incredibly accessible now compared to just months, much less years, ago, you aren’t going to just pop on the App Store to download it. First, I had to prep my Mac to handle it (similar steps apply on Windows, while Linux users will have much of this already built in).

Any computer user can embark on these sorts of steps for free nowadays, but if you aren’t used to tinkering with your computer’s configuration, it may seem a tad intimidating. If you didn’t know about terminals and command prompts when I mentioned them a moment ago, no time like the present to learn.

For a Mac user, it looks like this:

Install the core software development environment (Xcode), the one part that will come from the App Store.

Install Homebrew, a tool for Macs made to help ease the installation of the sort of command line tools that come built in on Linux. We need this to obtain Python, the programming language used for many of these ML tools. Recent versions of macOS have dropped out-of-the-box inclusion of Python (a mixed blessing, since often the included version was out of date anyway).

Install Miniconda, to enable grabbing the rest of the Python-related tools. (This step proved optional, but much simpler than the manual approach I took initially.)

All of these steps are summed up more efficiently than I initially accomplished them in a document attached to the Stable Diffusion fork I settled on. (Go with “option 2” when presented with two different routes in the instructions — it’ll save time and space for now.)
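Before moving on, it can help to confirm that the tools from the steps above actually landed on your command line. What follows is only a minimal sketch, not part of Stein’s instructions: `check_tools` is a hypothetical helper name, and the executable names `brew` and `conda` assume the default Homebrew and Miniconda installs.

```python
import shutil
import sys

# Tools installed in the steps above. The executable names assume default
# installs of Homebrew and Miniconda; adjust them if your setup differs.
TOOLS = {
    "Homebrew": "brew",
    "Miniconda": "conda",
}


def check_tools(tools=TOOLS):
    """Map each tool's label to the path of its executable, or None if absent."""
    return {label: shutil.which(exe) for label, exe in tools.items()}


if __name__ == "__main__":
    version = f"{sys.version_info.major}.{sys.version_info.minor}"
    print(f"Python {version} at {sys.executable}")
    for label, path in check_tools().items():
        print(f"{label}: {path or 'not found on PATH'}")
```

Run it from Terminal with `python3` followed by whatever file name you saved it under. A “not found” line usually means the installer did not finish or that Terminal needs to be closed and reopened.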

One caveat I learned after a few false starts: the standard steps for installing Stable Diffusion and its related tools gave me an old version of PyTorch, the machine learning library essential to the whole endeavor, and that version does not natively support the graphics processors in Apple Silicon computers. Most machine learning seems to be happening on workstations powered by very expensive Nvidia graphics cards, but Apple has been making a point of its latest wares being competitive with many of those cards, especially in machine learning, if software supports Apple’s hardware features.

Curious not just to see if I could get these tools to run, but to see how quickly they could run on an M1 Mac, I found the steps to download a still-in-development version of PyTorch that takes advantage of Apple Silicon’s GPU. Users of older, Intel-based Macs can skip this step and, I’d guess within weeks, so too will those using newer Macs.

If you’d like that cutting edge version on a Mac, this is the command you’ll need to run in Terminal after getting Miniconda installed, but before doing the other parts of the Stable Diffusion instructions:

conda install pytorch torchvision torchaudio -c pytorch-nightly

If you are reading this more than a week or two past publication date (September 7, 2022), I’d recommend stopping by the PyTorch web site to check if this is still necessary. Right now, when you select “Preview (Nightly) > Mac > Conda > Python > Default” in the selection table, it notes “MPS acceleration is available on MacOS 12.3+.” If that note disappears, it’s safe to say you can jump on the stable release instead, just as the overall Stable Diffusion instructions suggest.
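Whichever build you end up with, you can ask PyTorch itself whether it sees the Mac’s GPU. This is a small sketch under a few assumptions: `pick_device` is a made-up helper name, and the `torch.backends.mps` check only exists in PyTorch 1.12 and later, so the code looks it up cautiously rather than assuming it is there.

```python
def pick_device():
    """Return "mps", "cuda", or "cpu" depending on what PyTorch reports."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment at all.
        return "cpu"

    # torch.backends.mps is only present in PyTorch 1.12 and later,
    # so fetch it defensively instead of assuming it exists.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"  # Apple Silicon GPU via Metal
    if torch.cuda.is_available():
        return "cuda"  # Nvidia GPU
    return "cpu"


if __name__ == "__main__":
    print("PyTorch would use:", pick_device())
```

On an M1 or M2 Mac with a build that supports Apple’s GPU, this should report `mps`. If it reports `cpu`, the installed PyTorch is most likely a version without that support.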

With the base configured, Stable Diffusion is finally ready to be given a spin. Before doing that, I did follow the extra instructions to set up GFPGAN and Real-ESRGAN, which allow further enhancement of the generated images (and are intriguing in their own right). Stein’s documentation explains how to do that.

If you’ve gone that far, I’d recommend you do those extra steps. They help a lot in trying to encourage a departure from the horror-movie quality of faces generated by a tool like Craiyon. All said and done, with some wrong turns, a few hours in, I’d settled on this particular variant, tweaked how I installed it and was ready to go.

In my instance, going into the codebase’s directory in Terminal and using this command yielded the most agreeable configuration and one that could be accessed in my web browser at http://127.0.0.1:9090:

python scripts/dream.py --gfpgan_dir ../gfpgan/ --web -F
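If the browser shows nothing at that address, a quick check from a second Terminal window can tell you whether anything is listening there yet. This is a rough sketch rather than part of the fork: `web_ui_is_up` is a hypothetical helper, and the host and port are simply the ones from the address above.

```python
import socket


def web_ui_is_up(host="127.0.0.1", port=9090, timeout=1.0):
    """Return True if something accepts TCP connections at host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or otherwise unreachable.
        return False


if __name__ == "__main__":
    state = "up" if web_ui_is_up() else "not responding"
    print(f"Web interface at http://127.0.0.1:9090 is {state}")
```

It only confirms that a program has opened the port, not that the page works, but it separates “the server never started” from “the browser is the problem.”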

So what did I get for all of this trouble? My vacation I never went on and the party I never attended. Beautiful nature vistas and whimsical artwork. Not everything it produces is particularly good, but perhaps the shocking thing is that a great deal of it is good. It can even, with a bit of coaxing, bring to life inside jokes such as new mythical creatures or the preview of a new Pixar movie that will never exist.

Some of this is mere fanciful fun, though there are plenty of ways one could imagine everything from tiny publications like OFB to small businesses to churches to individuals making use of these interesting, unique creations in lieu of either paying for stock photography or searching for free-to-use imagery. (And hoping that free imagery really is — because there is a whole world of copyright trolls that try to trick users into thinking an image is free to use and then seeking to extort exorbitant license fees, something I wish I didn’t know from personal experience.)

Of course, ML imagery also raises questions. With these models rapidly improving, their malicious use to make people appear to have been, to have done or to have said things they never did is looming ominously. Others have questioned the ethics of drawing large heaps of data from the Internet, including much from professional artists and photographers, in a project that may soon endanger the livelihoods of illustrators and stock photography producers.

Those issues will have to wait for a future column. Some of those issues may be resolved well. Others may be equivalent to horse drawn carriage companies railing against automobiles: futile opposition to the merciless push forward of technology.

What is clear, and exciting, though, is that with the open nature of much of this development, these are tools the average person can play with, use for actual work and help improve. Paired with computers, like the entire line of Apple’s M1 and M2 systems, which have machine learning accelerators (the “Neural Engine”) built in, we are in an exciting moment that isn’t left just to those with massive data centers to chew on information.

In a way that still seems almost like science fiction, Machine Learning has arrived. And so too did my vacation I never went on.

Timothy R. Butler is Editor-in-Chief of Open for Business. He also serves as a pastor at Little Hills Church and FaithTree Christian Fellowship.
